In our latest research, published in Computers & Security, we investigate how developers select and manage passwords compared to regular users, with a focus on the risks posed by hard-coded credentials in source code. Analyzing over 2 million secrets and passwords from public GitHub repositories, we found that while developers typically choose more complex passwords, they sometimes opt for weaker ones when the context allows, and these weaker hard-coded credentials can expose significant security vulnerabilities.

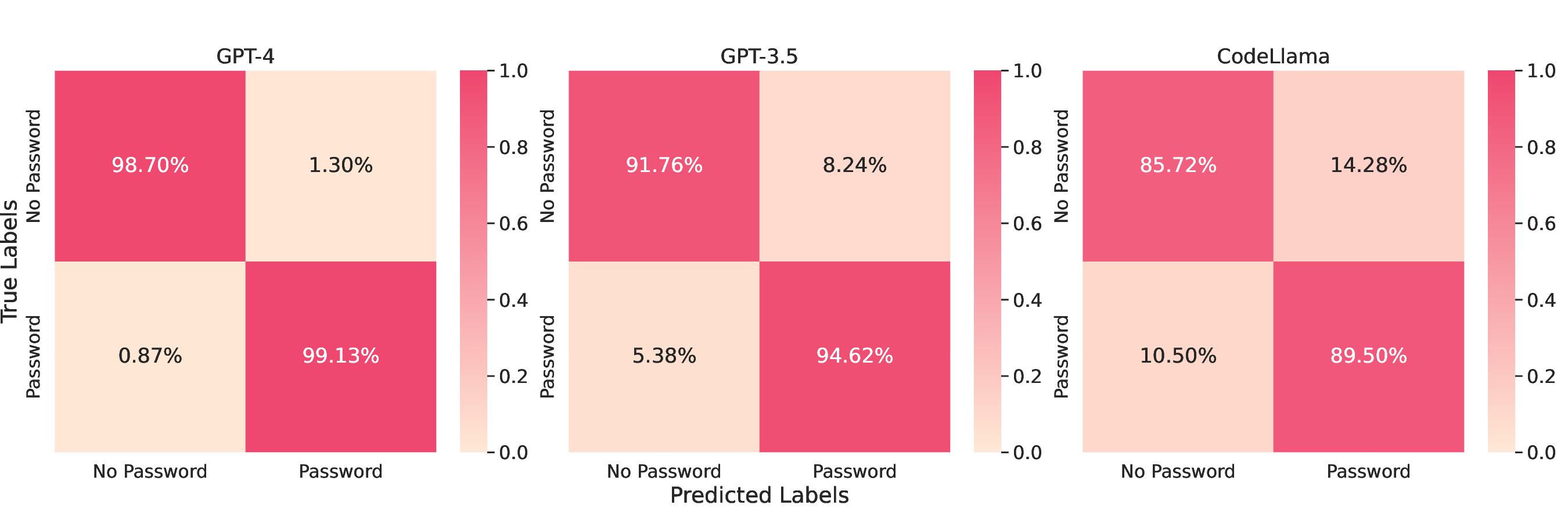

We also explored the potential of large language models (LLMs) for detecting these vulnerabilities. Our findings show that LLMs can outperform traditional detection methods at identifying hard-coded credentials because they take into account the context in which a password appears, making them a valuable tool for improving security in the software development process.
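To make the contrast with context-free detection concrete, here is a minimal sketch of an entropy-threshold heuristic of the kind some secret scanners rely on. It is not the method from our paper: the threshold `ENTROPY_THRESHOLD = 3.5` and the sample values are hypothetical. It illustrates two failure modes that context can help resolve: a weak hard-coded password scores low and slips through, while a random-looking but harmless value scores high and gets flagged.

```python
import math
from collections import Counter

# Hypothetical cut-off for illustration only; real scanners tune this per character set.
ENTROPY_THRESHOLD = 3.5


def shannon_entropy(s: str) -> float:
    """Bits per character of the string's empirical character distribution."""
    if not s:
        return 0.0
    counts = Counter(s)
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def flag_by_entropy(literal: str, threshold: float = ENTROPY_THRESHOLD) -> bool:
    """Context-free heuristic: flag a string literal only if it 'looks random'."""
    return shannon_entropy(literal) >= threshold


# Hypothetical source lines paired with the string literal a scanner would inspect.
samples = [
    # A real secret: a weak password hard-coded in configuration code.
    ('DB_PASSWORD = "admin123"', "admin123"),
    # Not a secret: a hash-like identifier that merely looks random.
    ('COMMIT = "9fceb02d0ae598e95dc970b74767f19372d61af8"',
     "9fceb02d0ae598e95dc970b74767f19372d61af8"),
]

if __name__ == "__main__":
    for line, literal in samples:
        score = shannon_entropy(literal)
        print(f"flagged={flag_by_entropy(literal)} entropy={score:.2f}  {line}")
```

Here `admin123` has eight distinct characters and scores exactly 3.0 bits per character, so it is missed, while the 40-character hex string scores about 3.87 and is flagged. A model that also reads the surrounding code, such as the `DB_PASSWORD =` assignment, can in principle use that context to get both cases right.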

Confusion matrices showing LLM performance on hard-coded credential identification.

For an in-depth look at our methodology and detailed results, read the full paper (open-access):